Digital Identity Facebook App

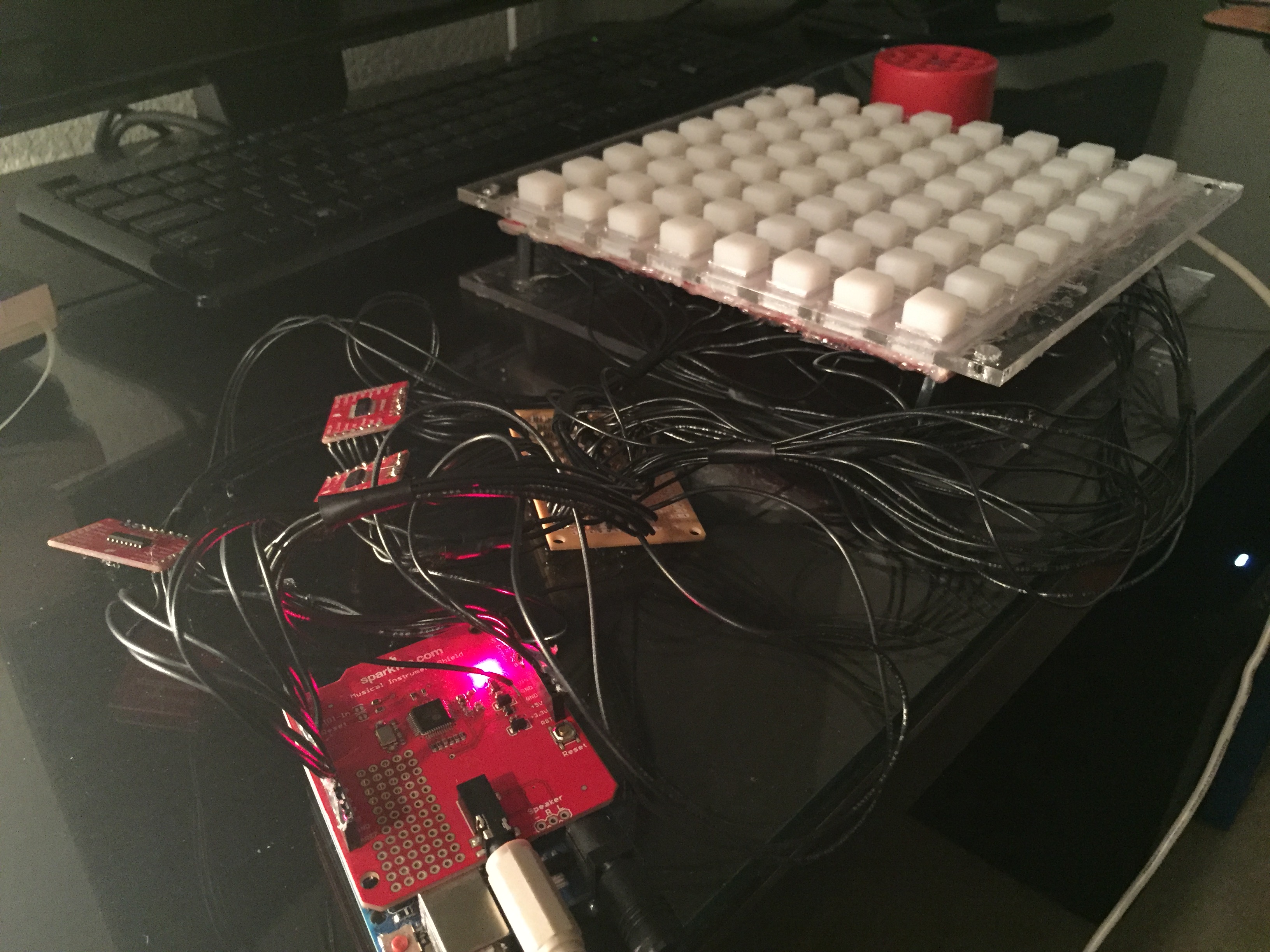

Software

Image Processing software written in Python

Facebook App website written in PHP

Google Engine used for parallel processing of images

Description

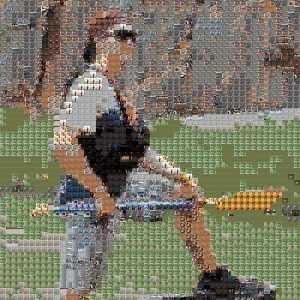

Everyone with a Facebook account knows that the profile picture is the most important picture on Facebook. In fact, it's the only picture that is publicly visible to all Facebook users. Users choose their profile picture meticulously because it holds so much importance to them.

Herein lies the problem... How could you possibly choose a picture that fully describes all that you are when you're limited to a single picture? And yet, we all consciously make that evaluation. There's a reason your profile picture holds so much value to you.

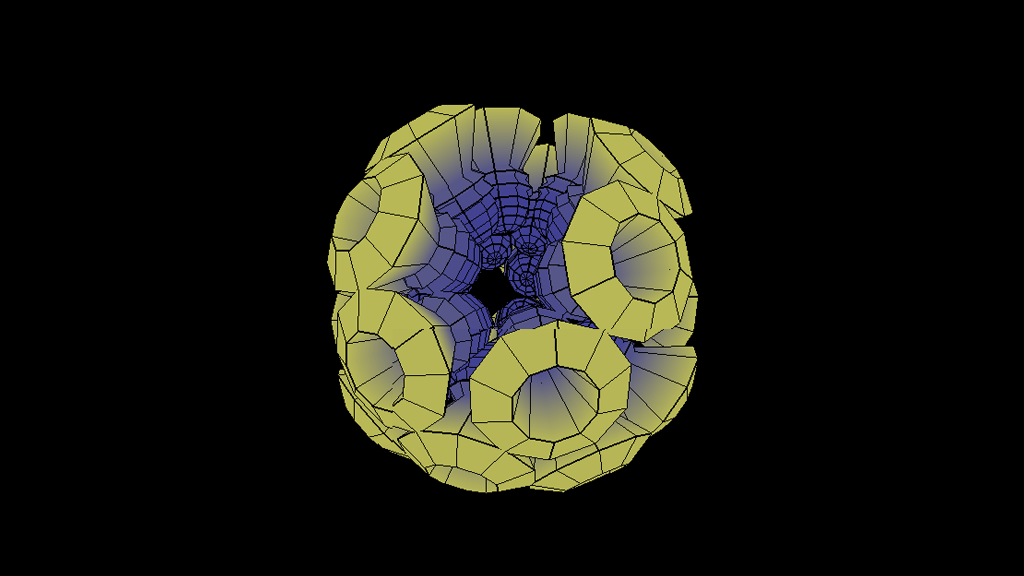

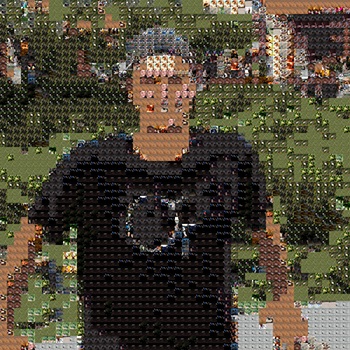

Digital Identity aims to bridge this gap. Using all of your Facebook photos and the photos in which you have been tagged, this app recreates your profile picture. The resulting photo still resembles your profile picture (again, it's the most important picture you have), except that it is now made up of all of your other photos. Your new photo carries the weight and importance of your profile picture, but it expresses your digital presence more fully by using all of the other photos available to you on Facebook. We call this your Digital Identity!

Engineering of Photomosaics

To create a Digital Identity, any software solution needs two pieces of input: the profile picture and an image library (the Facebook user's other photos). Each photo in the image library must be analyzed and characterized for a later image matching stage. Here is how the analysis works:

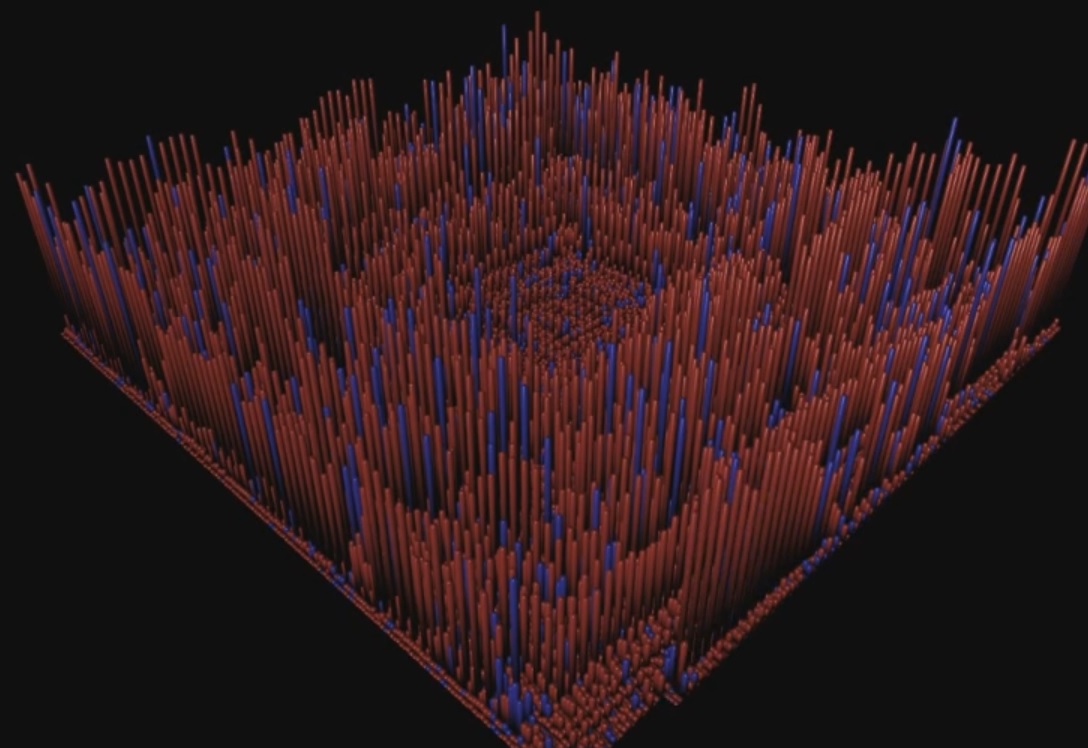

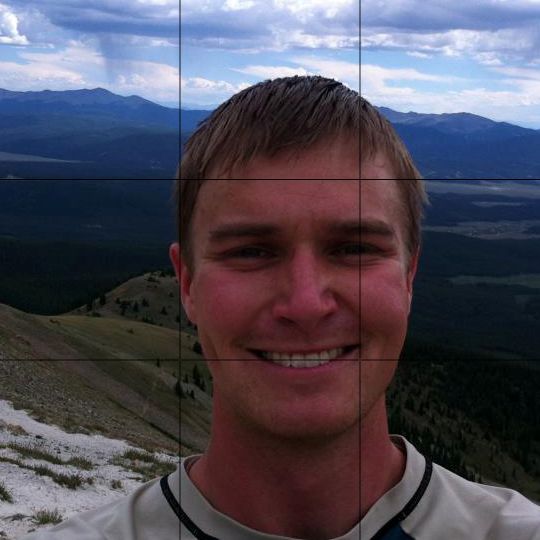

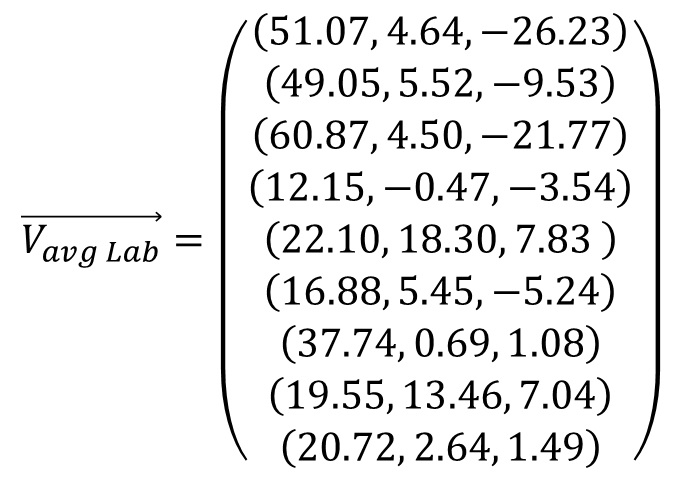

Each image in the user's photo library is segmented into nine separate regions, and an average color is calculated for each region. Colors can be represented in many different ways, depending on the color model. For this project, I decided to convert all of the RGB color values into the CIELAB color space before taking an average. I'll discuss this decision in more detail in just a bit. Finally, these values are saved as a color vector holding the L, A, and B values of the nine average colors in the image. This process is repeated for every image in the image library.
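The nine-region analysis can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes the image has already been converted to a 2-D list of (L, A, B) tuples that is at least three pixels wide and tall, and the names `region_averages` and `grid` are hypothetical.

```python
def region_averages(pixels, grid=3):
    """Return grid*grid average colors (row-major) for a 2-D list of color tuples.

    Assumption: `pixels` is a list of rows, each row a list of (L, A, B)
    tuples, and the image is at least `grid` pixels in each dimension.
    """
    height, width = len(pixels), len(pixels[0])
    averages = []
    for gy in range(grid):
        # Integer bounds of this band of rows
        y0, y1 = gy * height // grid, (gy + 1) * height // grid
        for gx in range(grid):
            # Integer bounds of this band of columns
            x0, x1 = gx * width // grid, (gx + 1) * width // grid
            region = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            count = len(region)
            # Average each of the three color components separately
            averages.append(
                tuple(sum(p[c] for p in region) / count for c in range(3))
            )
    return averages
```

With the default 3x3 grid, the result is a list of nine (L, A, B) averages, which corresponds to the color vector described above.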

At this point, it's time to start working on the profile picture. We start by breaking the profile picture into lots of little segments.

The same image analysis technique that was used on the library's photos is applied to each segment of the profile picture. Therefore, each segment will have an associated color vector specifying the average color at the nine sub-sections of each segment.
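One way to picture the segmentation step is the sketch below. It assumes square segments of a fixed size and simply drops any partial segments at the right and bottom edges; the original software may handle edges differently. The name `split_into_tiles` and the `tile_size` parameter are hypothetical.

```python
def split_into_tiles(pixels, tile_size):
    """Split a 2-D list of pixels into square tiles.

    Returns a dict mapping (tile_row, tile_col) to the tile's own 2-D list
    of pixels. Assumption: leftover pixels that do not fill a whole tile
    are ignored.
    """
    height, width = len(pixels), len(pixels[0])
    tiles = {}
    for top in range(0, height - tile_size + 1, tile_size):
        for left in range(0, width - tile_size + 1, tile_size):
            # Slice out tile_size rows, then tile_size columns from each row
            tile = [row[left:left + tile_size]
                    for row in pixels[top:top + tile_size]]
            tiles[(top // tile_size, left // tile_size)] = tile
    return tiles
```

Each tile can then be run through the same nine-region averaging that is applied to the library images, so that segments and library photos are described by vectors of the same shape.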

The final step is image matching. Each segment of the profile picture is compared against every image in the image library. The image that best matches the segment is resized to the segment's dimensions, and it then replaces that segment in the profile picture.
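The resize-and-replace part of this step might look like the sketch below. The source does not say which resampling method was used, so nearest-neighbor sampling is an assumption chosen for brevity, and `resize_nearest` and `paste` are hypothetical helper names operating on 2-D lists of pixels.

```python
def resize_nearest(pixels, new_height, new_width):
    """Resize a 2-D list of pixels with nearest-neighbor sampling (assumed method)."""
    height, width = len(pixels), len(pixels[0])
    return [
        [pixels[y * height // new_height][x * width // new_width]
         for x in range(new_width)]
        for y in range(new_height)
    ]


def paste(canvas, tile, top, left):
    """Overwrite a rectangular area of `canvas` with `tile`, in place."""
    for dy, row in enumerate(tile):
        # Slice assignment with an equal-length row keeps the canvas width unchanged
        canvas[top + dy][left:left + len(row)] = row
```

In a full pipeline, `paste` would be called once per segment, with the best-matching library image resized to the segment's dimensions first.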

Color matching

How do you know mathematically that, given a specific color, one color is a better match than another? For example, given a shade of dark green, how do we know whether a dark blue is a better match than a dark purple? Colors are typically stored as Red, Green, and Blue components (RGB). This has to do with the hardware technology that captures and displays photos. However, human eyes are more sensitive to some colors than to others. For this reason, we transform the colors from RGB to the XYZ color space, which is based on the tristimulus response of the cones in human eyes. The colors are then transformed from XYZ to LAB, where there is an approximately "uniform perceptual distance" between colors. At this point, we can calculate the "distance" between two colors using the Euclidean distance formula. For this project, we used a slightly different metric called "Delta E 94", which tracks perceived color difference slightly more accurately.
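The conversion chain and the Delta E 94 metric can be sketched as below. The source does not state which RGB variant or reference white was used, so this sketch assumes 8-bit sRGB input, the D65 white point, and the "graphic arts" Delta E 94 constants (kL = 1, K1 = 0.045, K2 = 0.015). All function names are illustrative.

```python
import math

# Assumption: D65 reference white, with Y normalized to 1.0
D65_WHITE = (0.95047, 1.0, 1.08883)


def srgb_to_xyz(rgb):
    """Convert an 8-bit sRGB triple to CIE XYZ."""
    def linearize(channel):
        c = channel / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(c) for c in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    return (x, y, z)


def xyz_to_lab(xyz, white=D65_WHITE):
    """Convert CIE XYZ to CIELAB relative to the given white point."""
    delta = 6.0 / 29.0

    def f(t):
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = (f(value / ref) for value, ref in zip(xyz, white))
    return (116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz))


def rgb_to_lab(rgb):
    return xyz_to_lab(srgb_to_xyz(rgb))


def delta_e_94(reference, sample, k_l=1.0, k1=0.045, k2=0.015):
    """CIE94 color difference; `reference` sets the chroma weighting."""
    l1, a1, b1 = reference
    l2, a2, b2 = sample
    c1 = math.hypot(a1, b1)
    c2 = math.hypot(a2, b2)
    d_l = l1 - l2
    d_c = c1 - c2
    # Hue difference squared; clamp tiny negative values from rounding
    d_h_sq = max(0.0, (a1 - a2) ** 2 + (b1 - b2) ** 2 - d_c ** 2)
    s_c = 1.0 + k1 * c1
    s_h = 1.0 + k2 * c1
    return math.sqrt((d_l / k_l) ** 2 + (d_c / s_c) ** 2 + d_h_sq / s_h ** 2)


# Illustrative RGB values for the dark green / blue / purple question
dark_green = rgb_to_lab((0, 100, 0))
dark_blue = rgb_to_lab((0, 0, 139))
dark_purple = rgb_to_lab((75, 0, 130))
closer = min((dark_blue, dark_purple), key=lambda c: delta_e_94(dark_green, c))
```

Note that Delta E 94 is not symmetric: the weighting terms depend on the chroma of the first (reference) color, so swapping the arguments can change the result slightly.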

Image matching

Since each image is made of nine sub-sections, during the image matching phase we calculate the "distance" between colors at each sub-section. This leaves us with a nine-dimensional vector of color distances. The best match is the library image whose distance vector is smallest, and we used the Euclidean (L2) norm to measure the size of that vector. We could have calculated a single average color for each segment of the profile picture, but by splitting each segment into nine sub-sections, we are able to create better spatial matches between images.
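A minimal sketch of this matching rule follows. It assumes each image is described by a list of nine (L, A, B) tuples and that the library is a dict from image name to such a list. To stay self-contained, the per-sub-section color distance defaults to plain Euclidean distance in LAB as a stand-in; the project itself used Delta E 94, which could be passed in through the hypothetical `color_distance` parameter.

```python
import math


def match_score(segment_vector, candidate_vector, color_distance=math.dist):
    """L2 norm of the nine per-sub-section color distances."""
    distances = [
        color_distance(seg_color, cand_color)
        for seg_color, cand_color in zip(segment_vector, candidate_vector)
    ]
    return math.sqrt(sum(d * d for d in distances))


def best_match(segment_vector, library, color_distance=math.dist):
    """Return the name of the library image with the smallest match score.

    Assumption: `library` maps an image name to its nine-color vector.
    """
    return min(
        library,
        key=lambda name: match_score(segment_vector, library[name], color_distance),
    )
```

Because the score compares sub-section against sub-section, an image that is dark on the left and light on the right will not match a segment with the opposite layout, even if their overall average colors are the same.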

Design Expo Poster: Digital Identity Poster