3D Video Conference

Software

Written in C with the OpenGL and OpenNI libraries

GLSL Shaders

Networking via TCP Sockets

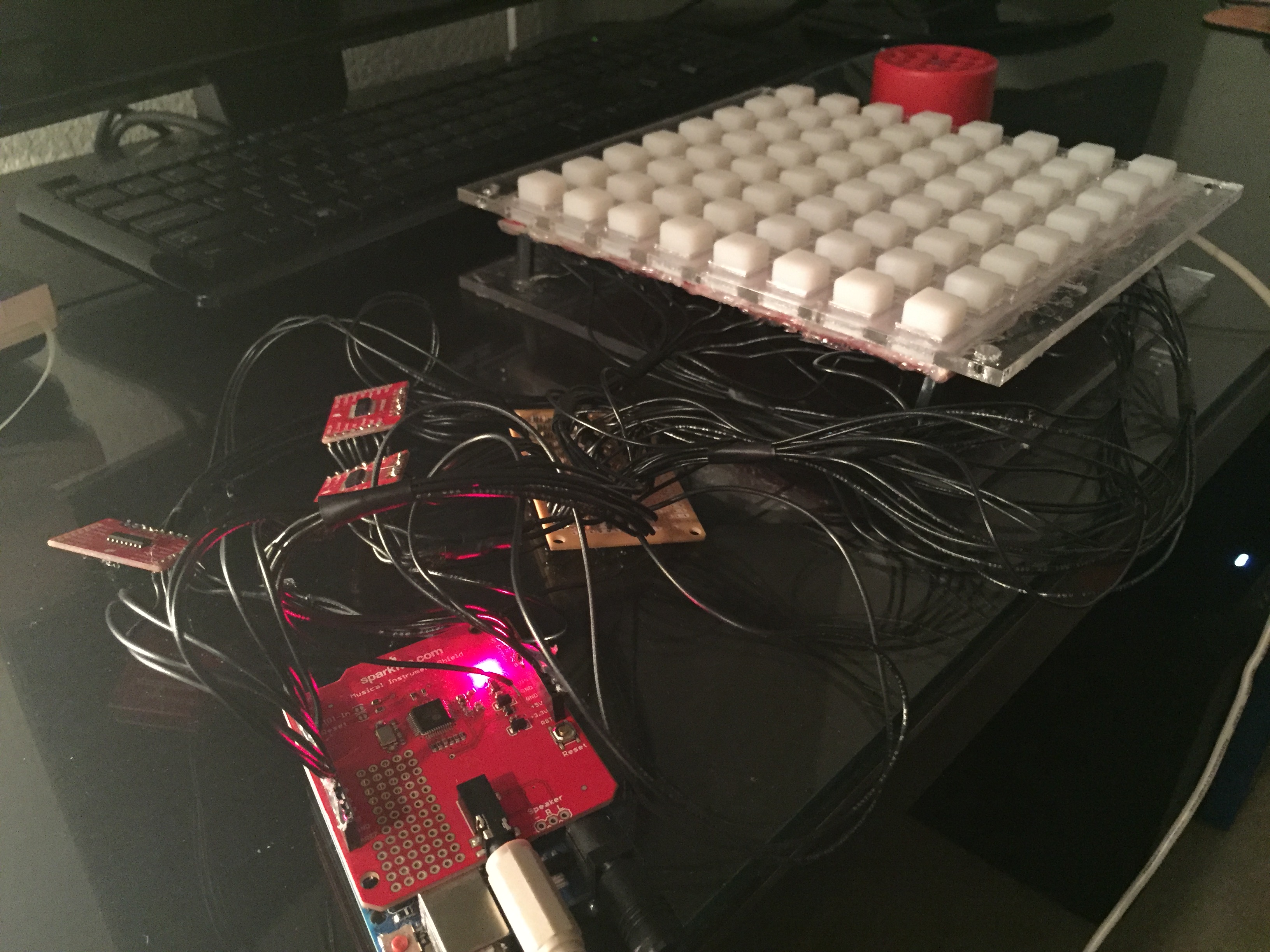

Xbox 360 Kinect Cameras

Description

Using an Xbox 360 Kinect (a depth-sensing 3D camera), I created a video chat application that displays each participant in 3D. The program can output the 3D images either as anaglyphs, which are viewed with colored glasses, or in a column-interleaved format for a polarized 3D monitor.
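The post doesn't say which anaglyph scheme the program used. A common choice is red-cyan: the red channel comes from the left-eye image, and the green and blue channels come from the right-eye image. The following is a minimal CPU sketch of that idea. It assumes both renders are tightly packed 8-bit RGB buffers of the same size, and `make_anaglyph` is a hypothetical name. The actual program presumably did this step on the GPU.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: compose a red-cyan anaglyph from two RGB images of
 * identical size. Buffers are assumed to be row-major, 3 bytes per pixel. */
void make_anaglyph(const uint8_t *left, const uint8_t *right,
                   uint8_t *out, size_t width, size_t height)
{
    size_t n = width * height;
    for (size_t i = 0; i < n; ++i) {
        out[3 * i + 0] = left[3 * i + 0];   /* red from the left-eye image */
        out[3 * i + 1] = right[3 * i + 1];  /* green from the right-eye image */
        out[3 * i + 2] = right[3 * i + 2];  /* blue from the right-eye image */
    }
}
```

With red over the left lens and cyan over the right, each eye sees mostly its own rendering.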

Technical Details

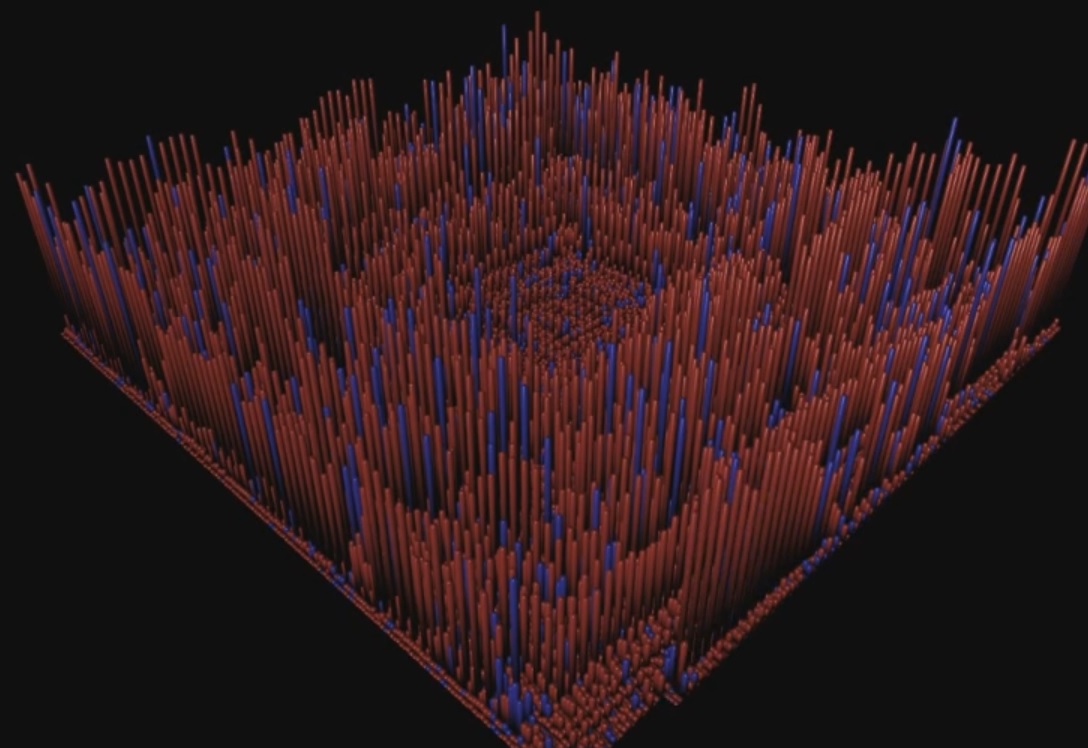

The first challenge in this program was to obtain a 3D image. There are many techniques for measuring the depth of a scene, and depth sensing is an entire field of research. Stereo vision, which compares images from two offset cameras, is one well-known approach. I chose instead to use an Xbox 360 Kinect, which projects a pattern of infrared dots across the room. An IR sensor picks up the dot locations, and from how the pattern is displaced the Kinect estimates the depth at each point in the scene. A standard color camera captures a matching picture of the same scene. I used the OpenNI library to control the camera and to merge the depth and color information at each pixel. The data has to be aligned first, because the depth sensor and the color camera sit slightly apart and therefore see the scene from slightly different viewpoints. The final output of all this work is a colored point cloud.
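The post credits OpenNI with capture and alignment. The sketch below covers only the last step: turning an aligned depth frame and color frame into a colored point cloud with a pinhole-camera back-projection. It makes three assumptions:

- Depth arrives as 16-bit millimeters, with 0 meaning "no reading".
- The color image has already been registered to the depth viewpoint.
- The intrinsics (`fx`, `fy`, `cx`, `cy`) are placeholders that a real program would take from the device or a calibration.

The struct and function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical pinhole-camera intrinsics for the depth sensor, in pixels. */
typedef struct {
    float fx, fy;   /* focal lengths */
    float cx, cy;   /* principal point */
} Intrinsics;

typedef struct {
    float x, y, z;     /* position in meters, camera coordinates */
    uint8_t r, g, b;   /* color sampled from the registered RGB image */
} ColorPoint;

/* Convert a depth image (millimeters, 0 = no reading) plus an RGB image
 * already aligned to the depth viewpoint into a colored point cloud.
 * `out` must hold width * height points; returns the number written. */
size_t depth_to_cloud(const uint16_t *depth_mm, const uint8_t *rgb,
                      int width, int height, Intrinsics k, ColorPoint *out)
{
    size_t count = 0;
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            int idx = v * width + u;
            uint16_t d = depth_mm[idx];
            if (d == 0) continue;            /* skip pixels with no depth */
            float z = d / 1000.0f;           /* millimeters -> meters */
            ColorPoint *p = &out[count++];
            p->x = (u - k.cx) * z / k.fx;    /* back-project along the pixel ray */
            p->y = (v - k.cy) * z / k.fy;
            p->z = z;
            p->r = rgb[3 * idx + 0];
            p->g = rgb[3 * idx + 1];
            p->b = rgb[3 * idx + 2];
        }
    }
    return count;
}
```

Skipping zero-depth pixels keeps holes in the IR pattern (shadows, shiny or dark surfaces) from appearing as points stuck to the camera.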

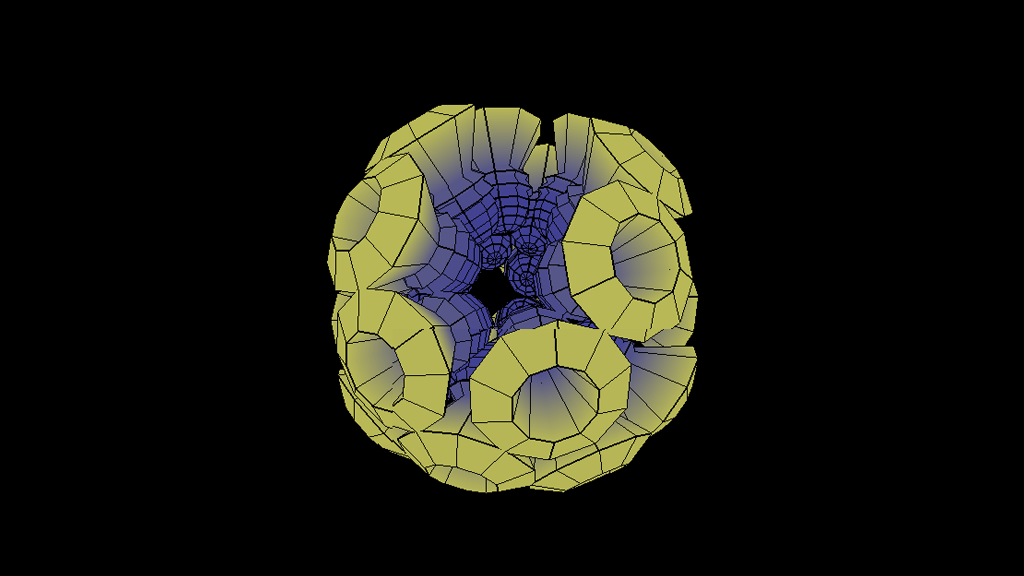

Given the point cloud, I needed a way to display this information to the user. I built a program using the OpenGL library to render the scene in 3D. OpenGL is fast because it runs the rendering work on the computer's graphics card (GPU). At this point in development, I could capture a 3D video feed and visualize the output.

To produce 3D images, I needed to render the scene twice: once from the left eye's perspective and once from the right eye's perspective. I developed a virtual stereo camera in OpenGL that handles both renderings.

3D monitors produce 3D images by polarizing each column of the screen so that, through special polarized glasses, it is seen by only the left eye or only the right eye. To produce the 3D effect, I needed to write a set of shaders that would interleave the left and right images column by column.
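The interleaving rule itself is simple: even columns take the left-eye image and odd columns take the right-eye image. In a fragment shader, this choice would typically be made from the fragment's window-space x coordinate (`gl_FragCoord.x`). For readability, the per-pixel logic is shown here in plain C.

Two assumptions apply:

- The images are packed as one `uint32_t` per RGBA pixel.
- Even columns belong to the left eye. Which parity maps to which eye depends on the monitor, and on the window's x position on screen, since it is the physical screen column that matters.

`interleave_columns` is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: interleave two same-size RGBA images column by column
 * for a column-polarized 3D monitor. Even columns come from the left-eye image,
 * odd columns from the right-eye image. */
void interleave_columns(const uint32_t *left, const uint32_t *right,
                        uint32_t *out, size_t width, size_t height)
{
    for (size_t y = 0; y < height; ++y) {
        for (size_t x = 0; x < width; ++x) {
            size_t i = y * width + x;
            out[i] = (x % 2 == 0) ? left[i] : right[i];
        }
    }
}
```

Each eye effectively receives half the horizontal resolution, which is the usual trade-off for this kind of passive 3D display.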

The final effect is really quite striking.

The final step in this project was to connect the two computers so they could share this 3D information. I used a simple client/server architecture with TCP sockets to transfer the 3D point clouds between the computers. Each computer then rendered, locally, the point cloud received from the other party's Xbox Kinect. This part of the process has lots of room for improvement, especially in speed. However, as a proof of concept, it is pretty cool. I think I could speed things up drastically with a protocol that lets each user control the other user's camera settings. Each computer would then render the images locally using those settings and transfer only the final images.
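The post doesn't describe its message format. Whatever the format, TCP is a byte stream, so two things follow. First, the receiver needs some way to tell where one frame ends. Second, `send` and `recv` may transfer only part of a buffer.

The following is a minimal sketch of one way to frame a point cloud over a socket. The hypothetical frame consists of a 4-byte point count in network byte order, followed by raw point records. The `WirePoint` layout and the function names are assumptions. Sending raw structs also assumes both machines share endianness, float format, and struct layout.

```c
#include <arpa/inet.h>   /* htonl, ntohl */
#include <errno.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/types.h>
#include <sys/socket.h>

/* Send exactly len bytes, looping because send() may write fewer bytes
 * than requested. Returns 0 on success, -1 on error. */
static int send_all(int fd, const void *buf, size_t len)
{
    const char *p = buf;
    while (len > 0) {
        ssize_t n = send(fd, p, len, 0);
        if (n < 0) {
            if (errno == EINTR) continue;   /* interrupted by a signal: retry */
            return -1;
        }
        p += n;
        len -= (size_t)n;
    }
    return 0;
}

/* Receive exactly len bytes. Returns 0 on success, -1 on error or if the
 * peer closed the connection early. */
static int recv_all(int fd, void *buf, size_t len)
{
    char *p = buf;
    while (len > 0) {
        ssize_t n = recv(fd, p, len, 0);
        if (n < 0 && errno == EINTR) continue;
        if (n <= 0) return -1;
        p += n;
        len -= (size_t)n;
    }
    return 0;
}

/* Hypothetical on-the-wire point record (16 bytes). */
typedef struct {
    float x, y, z;
    uint8_t r, g, b, pad;
} WirePoint;

/* Send one frame: point count header, then the points. */
int send_cloud(int fd, const WirePoint *pts, uint32_t count)
{
    uint32_t header = htonl(count);
    if (send_all(fd, &header, sizeof header) != 0) return -1;
    return send_all(fd, pts, (size_t)count * sizeof *pts);
}

/* Receive one frame into pts (capacity max_points). Returns the point count,
 * or -1 on error. If the frame is too large, the payload is left unread and
 * the caller should close the connection, since the stream is out of sync. */
long recv_cloud(int fd, WirePoint *pts, uint32_t max_points)
{
    uint32_t header;
    if (recv_all(fd, &header, sizeof header) != 0) return -1;
    uint32_t count = ntohl(header);
    if (count > max_points) return -1;
    if (recv_all(fd, pts, (size_t)count * sizeof *pts) != 0) return -1;
    return (long)count;
}
```

For scale, a 640×480 depth frame can yield up to 307,200 points. At 16 bytes per `WirePoint`, that is about 4.9 MB per full frame, which adds up quickly at video frame rates.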